如何定义一个总结评估器

有些指标只能在整个实验层面定义,而不是在实验的单个运行中定义。例如,您可能希望计算评估目标在数据集中所有示例上的总体通过率或 F1 分数。这些被称为 summary_evaluators。

基本示例

在这里,我们将计算 F1 分数,它是精确率和召回率的组合。

这类指标只能在实验的所有示例上计算,因此我们的评估器接受一个输出列表和一个参考输出列表。

- Python

- TypeScript

def f1_score_summary_evaluator(outputs: list[dict], reference_outputs: list[dict]) -> dict:

true_positives = 0

false_positives = 0

false_negatives = 0

for output_dict, reference_output_dict in zip(outputs, reference_outputs):

output = output_dict["class"]

reference_output = reference_output_dict["class"]

if output == "Toxic" and reference_output == "Toxic":

true_positives += 1

elif output == "Toxic" and reference_output == "Not toxic":

false_positives += 1

elif output == "Not toxic" and reference_output == "Toxic":

false_negatives += 1

if true_positives == 0:

return {"key": "f1_score", "score": 0.0}

precision = true_positives / (true_positives + false_positives)

recall = true_positives / (true_positives + false_negatives)

f1_score = 2 * (precision * recall) / (precision + recall)

return {"key": "f1_score", "score": f1_score}

function f1ScoreSummaryEvaluator({ outputs, referenceOutputs }: { outputs: Record<string, any>[], referenceOutputs: Record<string, any>[] }) {

let truePositives = 0;

let falsePositives = 0;

let falseNegatives = 0;

for (let i = 0; i < outputs.length; i++) {

const output = outputs[i]["class"];

const referenceOutput = referenceOutputs[i]["class"];

if (output === "Toxic" && referenceOutput === "Toxic") {

truePositives += 1;

} else if (output === "Toxic" && referenceOutput === "Not toxic") {

falsePositives += 1;

} else if (output === "Not toxic" && referenceOutput === "Toxic") {

falseNegatives += 1;

}

}

if (truePositives === 0) {

return { key: "f1_score", score: 0.0 };

}

const precision = truePositives / (truePositives + falsePositives);

const recall = truePositives / (truePositives + falseNegatives);

const f1Score = 2 * (precision * recall) / (precision + recall);

return { key: "f1_score", score: f1Score };

}

然后,您可以如下将此评估器传递给 evaluate 方法

- Python

- TypeScript

from langsmith import Client

ls_client = Client()

dataset = ls_client.clone_public_dataset(

"https://smith.langchain.com/public/3d6831e6-1680-4c88-94df-618c8e01fc55/d"

)

def bad_classifier(inputs: dict) -> dict:

return {"class": "Not toxic"}

def correct(outputs: dict, reference_outputs: dict) -> bool:

"""Row-level correctness evaluator."""

return outputs["class"] == reference_outputs["label"]

results = ls_client.evaluate(

bad_classified,

data=dataset,

evaluators=[correct],

summary_evaluators=[pass_50],

)

import { Client } from "langsmith";

import { evaluate } from "langsmith/evaluation";

import type { EvaluationResult } from "langsmith/evaluation";

const client = new Client();

const datasetName = "Toxic queries";

const dataset = await client.clonePublicDataset(

"https://smith.langchain.com/public/3d6831e6-1680-4c88-94df-618c8e01fc55/d",

{ datasetName: datasetName }

);

function correct({ outputs, referenceOutputs }: { outputs: Record<string, any>, referenceOutputs?: Record<string, any> }): EvaluationResult {

const score = outputs["class"] === referenceOutputs?["label"];

return { key: "correct", score };

}

function badClassifier(inputs: Record<string, any>): { class: string } {

return { class: "Not toxic" };

}

await evaluate(badClassifier, {

data: datasetName,

evaluators: [correct],

summaryEvaluators: [summaryEval],

experimentPrefix: "Toxic Queries",

});

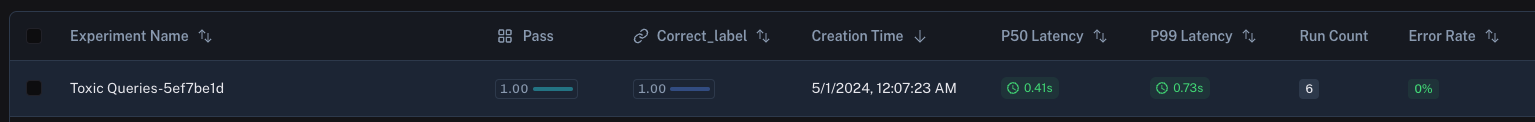

在 LangSmith UI 中,您会看到总结评估器的分数以及相应的键。

总结评估器参数

总结评估器函数必须具有特定的参数名称。它们可以接受以下参数的任意子集

inputs: list[dict]: 对应数据集中单个示例的输入列表。outputs: list[dict]: 每个实验根据给定输入生成的字典输出列表。reference_outputs/referenceOutputs: list[dict]: 与示例关联的参考输出列表,如果可用。runs: list[Run]: 两个实验在给定示例上生成的完整 运行(Run) 对象列表。如果您需要访问每个运行的中间步骤或元数据,请使用此项。examples: list[Example]: 所有数据集 示例(Example) 对象,包括示例输入、输出(如果可用)和元数据(如果可用)。

总结评估器输出

总结评估器预期返回以下类型之一

Python 和 JS/TS

dict: 格式为{"score": ..., "name": ...}的字典允许您传递数值或布尔分数和指标名称。

目前仅限 Python

int | float | bool: 这被解释为可以平均、排序等的连续指标。函数名称用作指标的名称。