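Run backtests on a new version of an agent

Deploying your application is just the beginning of a continuous improvement process. Once the application is in production, you will want to refine the system by improving its prompts, language models, tools, and architecture. Backtesting means evaluating a new version of the application on historical data and comparing the new outputs to the original ones. Compared with evaluations that use pre-production datasets, backtesting gives a clearer indication of whether the new version improves on the current deployment.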

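The basic steps of backtesting are:

- Select sample runs from your production tracing project to test against.

- Convert the run inputs into a dataset and record the run outputs as an initial experiment against that dataset.

- Run your new system on the new dataset and compare the results of the two experiments.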

This process gives you a new dataset of representative inputs that you can version and use to backtest your models.
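The guide does not prescribe how to pick the sample runs. As a minimal sketch, assuming the runs have already been fetched and are represented here as plain dicts (a stand-in for real run objects), a seeded random sample keeps the selection reproducible. The helper name `sample_runs` is hypothetical.

```python
import random


def sample_runs(runs: list[dict], k: int, seed: int = 0) -> list[dict]:
    """Pick up to k runs uniformly at random, reproducibly for a given seed.

    `runs` is a list of run-like dicts (an assumption made for illustration).
    """
    if k >= len(runs):
        # Nothing to sample: return a copy of everything we have.
        return list(runs)
    rng = random.Random(seed)  # local generator, does not touch global state
    return rng.sample(runs, k)
```

Fixing the seed means the same subset is chosen on every call, which makes it easier to rebuild the same backtesting dataset later.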

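Often you will not have definitive "ground truth" answers. In that case, you can label the outputs manually or use evaluators that do not rely on reference data. If your application allows you to capture ground-truth labels, for example by letting users leave feedback, we strongly recommend doing so.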

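Setup

Configure the environment

Install the required packages and set the environment variables. This guide requires langsmith>=0.2.4.

For convenience, this tutorial uses the LangChain OSS framework, but the LangSmith functionality shown is framework-agnostic.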

pip install -U langsmith langchain langchain-openai langchain-community langgraph emoji
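After installing, it can be useful to confirm that the installed langsmith version meets the 0.2.4 minimum stated above. The following is a minimal sketch using only the standard library. The helper names are hypothetical, and the version parsing is deliberately simple: it ignores pre-release suffixes.

```python
from importlib.metadata import PackageNotFoundError, version


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '0.2.4' into (0, 2, 4), ignoring any non-numeric suffix such as 'rc1'."""
    parts = []
    for piece in text.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(installed: str, minimum: str = "0.2.4") -> bool:
    """Return True when the installed version is at least the minimum."""
    return parse_version(installed) >= parse_version(minimum)


def check_langsmith() -> str:
    """Describe whether the locally installed langsmith is new enough."""
    try:
        installed = version("langsmith")
    except PackageNotFoundError:
        return "langsmith is not installed"
    status = "ok" if meets_minimum(installed) else "too old, run pip install -U langsmith"
    return f"langsmith {installed}: {status}"
```

Calling `print(check_langsmith())` reports the result for the current environment.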

import getpass
import os

# Set the project name to whichever project you'd like to be testing against
project_name = "Tweet Writing Task"
os.environ["LANGSMITH_PROJECT"] = project_name
os.environ["LANGSMITH_TRACING"] = "true"
if not os.environ.get("LANGSMITH_API_KEY"):
    os.environ["LANGSMITH_API_KEY"] = getpass.getpass("YOUR API KEY")

# Optional. You can swap OpenAI for any other tool-calling chat model.
os.environ["OPENAI_API_KEY"] = "YOUR OPENAI API KEY"

# Optional. You can swap Tavily for the free DuckDuckGo search tool if preferred.
# Get Tavily API key: https://tavily.com
os.environ["TAVILY_API_KEY"] = "YOUR TAVILY API KEY"

Define the application

In this example, we create a simple tweet-writing application that has access to internet search tools:

from langchain.chat_models import init_chat_model
from langgraph.prebuilt import create_react_agent
from langchain_community.tools import DuckDuckGoSearchRun, TavilySearchResults
from langchain_core.rate_limiters import InMemoryRateLimiter

# We will use GPT-3.5 Turbo as the baseline and compare against GPT-4o
gpt_3_5_turbo = init_chat_model(
    "gpt-3.5-turbo",
    temperature=1,
    configurable_fields=("model", "model_provider"),
)

# The instructions are passed as a system message to the agent
instructions = """You are a tweet writing assistant. Given a topic, do some research and write a relevant and engaging tweet about it.
- Use at least 3 emojis in each tweet
- The tweet should be no longer than 280 characters
- Always use the search tool to gather recent information on the tweet topic
- Write the tweet only based on the search content. Do not rely on your internal knowledge
- When relevant, link to your sources
- Make your tweet as engaging as possible"""

# Define the tools our agent can use
# If you have a higher tiered Tavily API plan you can increase this
rate_limiter = InMemoryRateLimiter(requests_per_second=0.08)

# Use DuckDuckGo if you don't have a Tavily API key:
# tools = [DuckDuckGoSearchRun(rate_limiter=rate_limiter)]
tools = [TavilySearchResults(max_results=5, rate_limiter=rate_limiter)]

agent = create_react_agent(gpt_3_5_turbo, tools=tools, state_modifier=instructions)
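A note on the rate limit used above: `requests_per_second=0.08` works out to about one search every 12.5 seconds. The sketch below only does that arithmetic. It is a rough estimate that ignores the limiter's burst allowance and any model latency, and the helper names are hypothetical.

```python
def seconds_between_requests(requests_per_second: float) -> float:
    """Average spacing the limiter enforces between consecutive requests."""
    if requests_per_second <= 0:
        raise ValueError("requests_per_second must be positive")
    return 1.0 / requests_per_second


def rough_search_time(n_searches: int, requests_per_second: float) -> float:
    """Rough time in seconds for n_searches rate-limited calls issued back to back."""
    return n_searches * seconds_between_requests(requests_per_second)
```

Under these assumptions, eleven topics that each trigger a single search would spend on the order of two minutes waiting on the search tool alone, which is worth keeping in mind when batching inputs.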

Simulate production data

Now let's simulate some production data:

fake_production_inputs = [
    "Alan turing's early childhood",
    "Economic impacts of the European Union",
    "Underrated philosophers",
    "History of the Roxie theater in San Francisco",
    "ELI5: gravitational waves",
    "The arguments for and against a parliamentary system",
    "Pivotal moments in music history",
    "Big ideas in programming languages",
    "Big questions in biology",
    "The relationship between math and reality",
    "What makes someone funny",
]

agent.batch(
    [{"messages": [{"role": "user", "content": content}]} for content in fake_production_inputs],
)

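Convert production traces to an experiment

The first step is to generate a dataset from the production inputs. Then copy over all of the traces to serve as a baseline experiment.

Select the runs to backtest

You can select the runs to backtest with the filter argument of list_runs. The filter argument uses the LangSmith trace query syntax to select runs.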

from datetime import datetime, timedelta, timezone
from uuid import uuid4

from langsmith import Client
from langsmith.beta import convert_runs_to_test

# Fetch the runs we want to convert to a dataset/experiment
client = Client()

# How we are sampling runs to include in our dataset
end_time = datetime.now(tz=timezone.utc)
start_time = end_time - timedelta(days=1)
run_filter = f'and(gt(start_time, "{start_time.isoformat()}"), lt(end_time, "{end_time.isoformat()}"))'

prod_runs = list(
    client.list_runs(
        project_name=project_name,
        is_root=True,
        filter=run_filter,
    )
)
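The filter string above can be factored into a small helper so that different time windows are easy to try. This is a minimal sketch that only reuses the `and`, `gt`, and `lt` clauses already shown. The helper names are hypothetical, and no other filter fields are assumed.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def time_window_filter(start: datetime, end: datetime) -> str:
    """Build a filter selecting runs that started after `start` and ended before `end`."""
    return (
        f'and(gt(start_time, "{start.isoformat()}"), '
        f'lt(end_time, "{end.isoformat()}"))'
    )


def last_n_days_filter(days: int, now: Optional[datetime] = None) -> str:
    """Filter for the `days` days leading up to `now` (defaults to the current UTC time)."""
    end = now or datetime.now(tz=timezone.utc)
    return time_window_filter(end - timedelta(days=days), end)
```

For example, `last_n_days_filter(7)` produces a string that can be passed as the `filter` argument in the same way as `run_filter`.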

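Convert the runs to an experiment

convert_runs_to_test is a function that takes some runs and does the following:

- The inputs, and optionally the outputs, are saved to a dataset as examples.

- The inputs and outputs are stored as an experiment, as if you had run the evaluate function and received those outputs.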
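To make the two effects described above concrete, here is a purely conceptual sketch over plain dicts. It is not the library's implementation: the function name `split_runs` and the field names (`reference_outputs`, `example_id`) are hypothetical and chosen only for illustration.

```python
def split_runs(runs: list[dict], include_outputs: bool = False) -> tuple[list[dict], list[dict]]:
    """Return (dataset_examples, experiment_rows) built from run-like dicts.

    Each run is assumed to look like {"inputs": ..., "outputs": ...}.
    """
    examples, experiment_rows = [], []
    for index, run in enumerate(runs):
        example = {"id": index, "inputs": run["inputs"]}
        if include_outputs:
            # Optionally treat the historical output as the reference answer.
            example["reference_outputs"] = run["outputs"]
        examples.append(example)
        # The historical output is always recorded as the baseline result.
        experiment_rows.append({"example_id": index, "outputs": run["outputs"]})
    return examples, experiment_rows
```

With `include_outputs=False`, the dataset holds inputs only, so later evaluators cannot compare against a reference and must judge each output on its own.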

# Name of the dataset we want to create
dataset_name = f'{project_name}-backtesting {start_time.strftime("%Y-%m-%d")}-{end_time.strftime("%Y-%m-%d")}'

# Name of the experiment we want to create from the historical runs
baseline_experiment_name = f"prod-baseline-gpt-3.5-turbo-{str(uuid4())[:4]}"

# This converts the runs to a dataset + experiment
convert_runs_to_test(
    prod_runs,
    # Name of the resulting dataset
    dataset_name=dataset_name,
    # Whether to include the run outputs as reference/ground truth
    include_outputs=False,
    # Whether to include the full traces in the resulting experiment
    # (default is to just include the root run)
    load_child_runs=True,
    # Name of the experiment so we can apply evaluators to it after
    test_project_name=baseline_experiment_name,
)

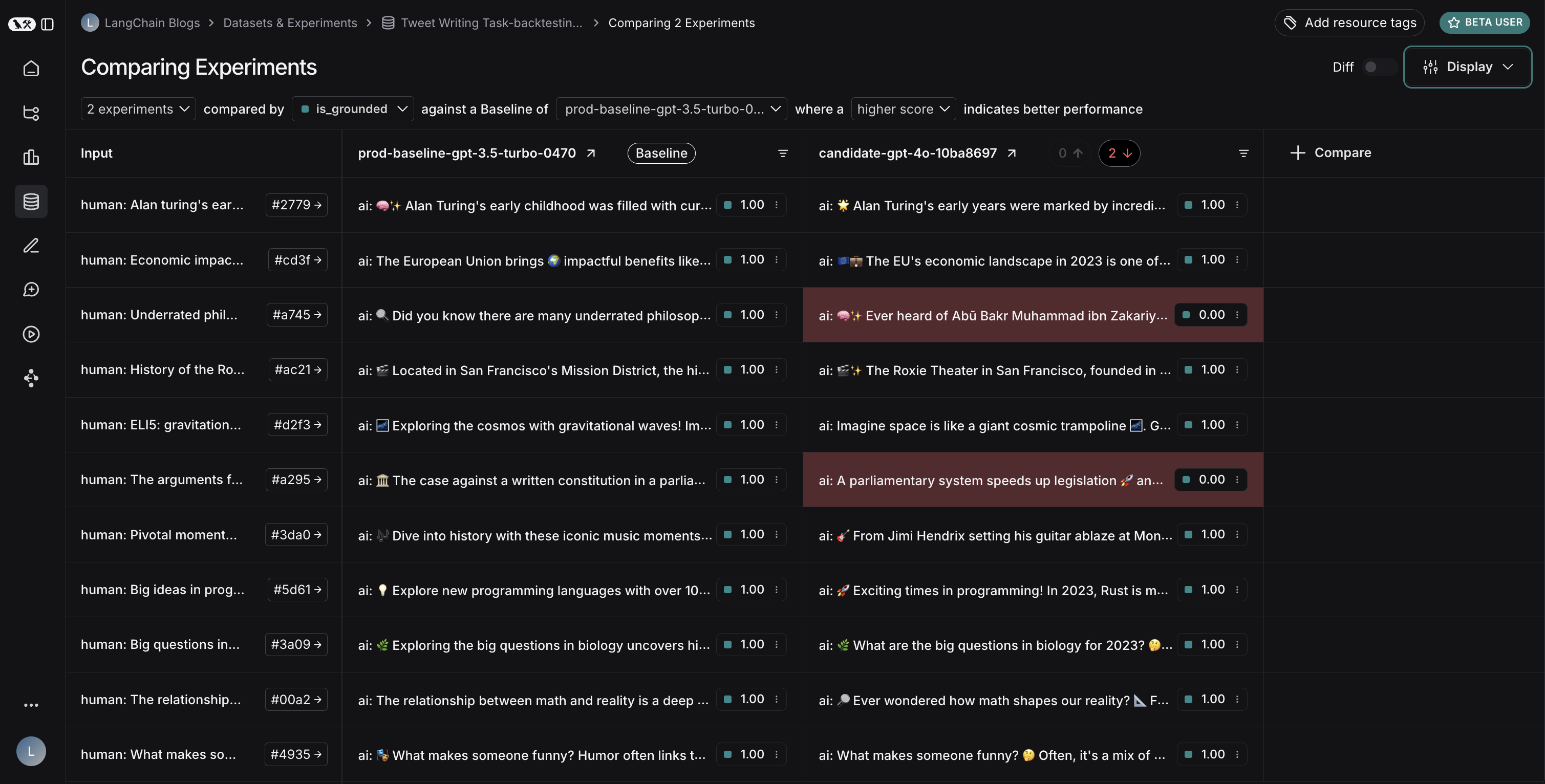

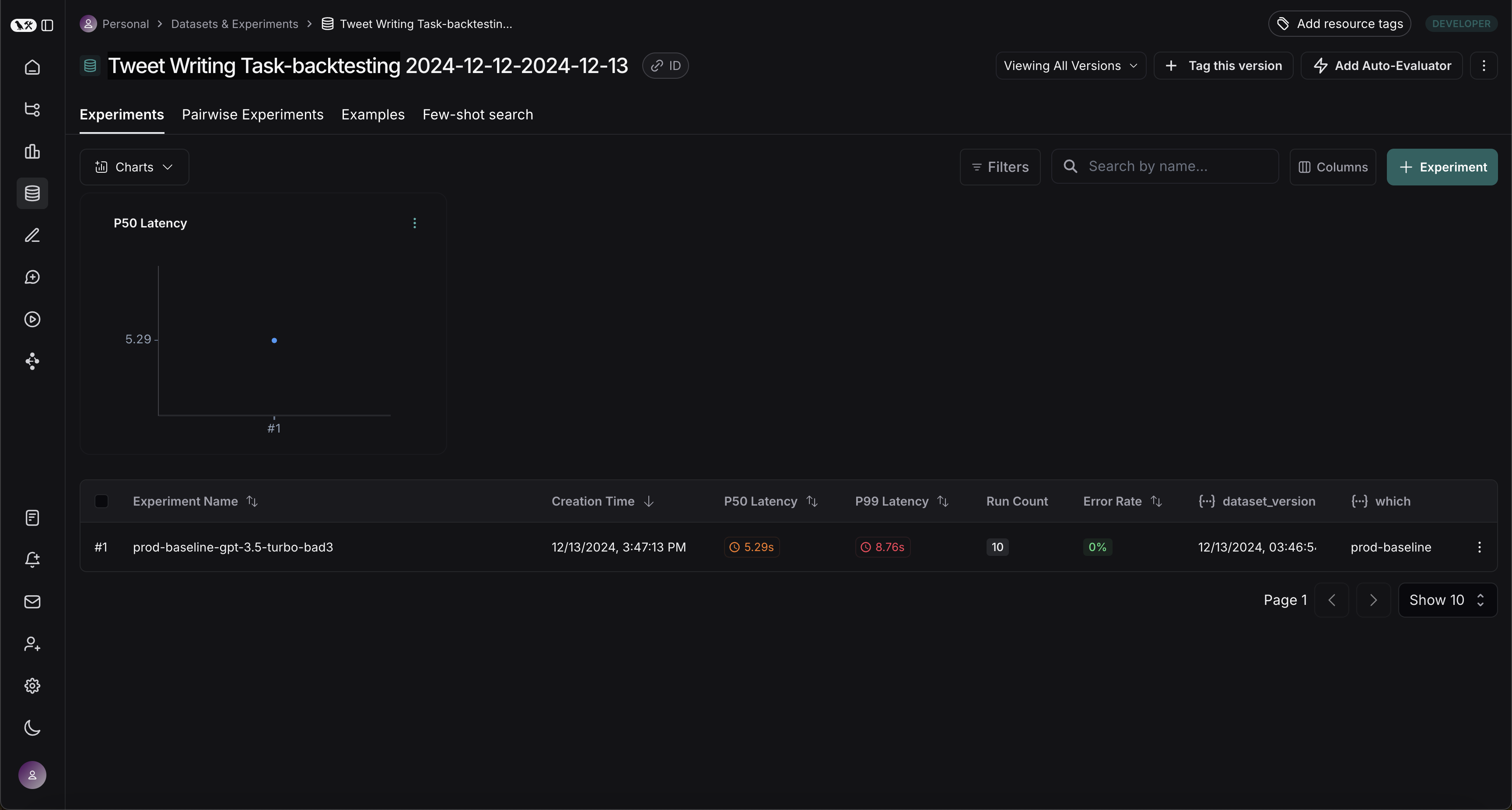

完成此步骤后,您将在 LangSmith 项目中看到一个名为“推文撰写任务回溯测试 今日日期”的新数据集,其中包含一个实验,如下所示:

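Benchmark against the new system

Now we can start benchmarking the production runs against a new system.

Define evaluators

First, let's define the evaluators we will use to compare the two systems. Note that we have no reference outputs, so we need evaluation metrics that require only the actual outputs.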

import emoji
from pydantic import BaseModel, Field
from langchain_core.messages import convert_to_openai_messages

class Grade(BaseModel):
    """Grade whether a response is supported by some context."""
    grounded: bool = Field(..., description="Is the majority of the response supported by the retrieved context?")

grounded_instructions = f"""You have given somebody some contextual information and asked them to write a statement grounded in that context.

Grade whether their response is fully supported by the context you have provided. \
If any meaningful part of their statement is not backed up directly by the context you provided, then their response is not grounded. \
Otherwise it is grounded."""
grounded_model = init_chat_model(model="gpt-4o").with_structured_output(Grade)

def lt_280_chars(outputs: dict) -> bool:
    messages = convert_to_openai_messages(outputs["messages"])
    return len(messages[-1]['content']) <= 280

def gte_3_emojis(outputs: dict) -> bool:
    messages = convert_to_openai_messages(outputs["messages"])
    return len(emoji.emoji_list(messages[-1]['content'])) >= 3

async def is_grounded(outputs: dict) -> bool:
    context = ""
    messages = convert_to_openai_messages(outputs["messages"])
    for message in messages:
        if message["role"] == "tool":
            # Tool message outputs are the results returned from the Tavily/DuckDuckGo tool
            context += "\n\n" + message["content"]
    tweet = messages[-1]["content"]
    user = f"""CONTEXT PROVIDED:
{context}

RESPONSE GIVEN:
{tweet}"""
    grade = await grounded_model.ainvoke([
        {"role": "system", "content": grounded_instructions},
        {"role": "user", "content": user}
    ])
    return grade.grounded
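Other reference-free checks can follow the same shape as the evaluators above. As one hypothetical example, the agent instructions ask it to link to its sources when relevant, so a simple evaluator could test whether the final message contains a URL. This sketch assumes the messages are already plain dicts with "role" and "content" keys. With real agent outputs you would first convert them with convert_to_openai_messages, as the evaluators above do.

```python
import re

# Deliberately loose pattern: anything that starts with http:// or https://
URL_PATTERN = re.compile(r"https?://\S+")


def final_text(outputs: dict) -> str:
    """Return the content of the last message, assumed to be the tweet."""
    content = outputs["messages"][-1]["content"]
    return content if isinstance(content, str) else ""


def links_to_source(outputs: dict) -> bool:
    """True if the final message contains at least one URL."""
    return bool(URL_PATTERN.search(final_text(outputs)))
```

Because the function takes only `outputs`, it has the same signature as lt_280_chars and gte_3_emojis and needs no reference data.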

Evaluate the baseline

Now, let's run our evaluators against the baseline experiment.

baseline_results = await client.aevaluate(
    baseline_experiment_name,
    evaluators=[lt_280_chars, gte_3_emojis, is_grounded],
)

# If you have pandas installed, you can easily explore the results as a DataFrame:
# baseline_results.to_pandas()

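Define and evaluate the new system

Now, let's define and evaluate our new system. In this example, the new system is identical to the old one but uses GPT-4o instead of GPT-3.5. Because our model is configurable, we only need to update the default config passed to the agent.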

candidate_results = await client.aevaluate(
    agent.with_config(model="gpt-4o"),
    data=dataset_name,
    evaluators=[lt_280_chars, gte_3_emojis, is_grounded],
    experiment_prefix="candidate-gpt-4o",
)

# If you have pandas installed, you can easily explore the results as a DataFrame:
# candidate_results.to_pandas()

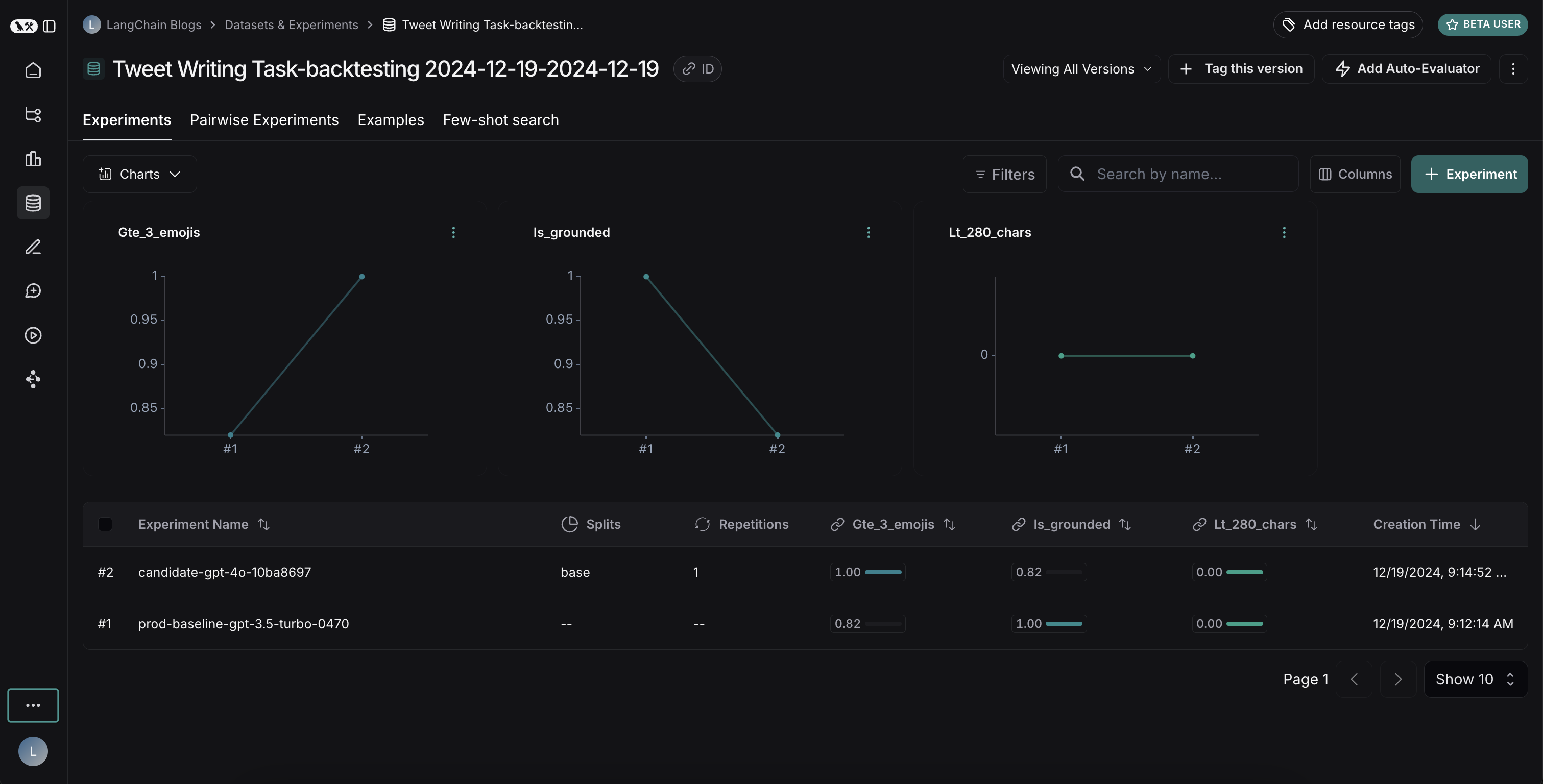

Compare the results

After running both experiments, you can view them in your dataset.
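Besides the UI, the two experiments can be compared offline by computing a pass rate per evaluator. The sketch below is a minimal example that assumes each experiment has been exported to a list of dicts mapping evaluator name to a boolean score (for instance, from the rows of the to_pandas() output). That row shape and the helper names are assumptions, not part of the LangSmith API.

```python
def pass_rates(rows: list[dict]) -> dict[str, float]:
    """Fraction of rows on which each evaluator returned a truthy score."""
    totals: dict[str, int] = {}
    passes: dict[str, int] = {}
    for row in rows:
        for key, passed in row.items():
            totals[key] = totals.get(key, 0) + 1
            passes[key] = passes.get(key, 0) + int(bool(passed))
    return {key: passes[key] / totals[key] for key in totals}


def compare(baseline: list[dict], candidate: list[dict]) -> dict[str, float]:
    """Candidate pass rate minus baseline pass rate, per shared evaluator."""
    base, cand = pass_rates(baseline), pass_rates(candidate)
    return {key: cand[key] - base[key] for key in base if key in cand}
```

A positive value means the candidate passed that evaluator more often than the baseline, and a negative value means it regressed.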

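The results reveal an interesting tradeoff between the two models:

- GPT-4o follows the formatting rules more reliably, consistently including the required number of emojis.

- However, GPT-4o is less reliable at staying grounded in the provided search results.

To illustrate the grounding issue: in this example run, GPT-4o included facts about the medical contributions of Abu Bakr Muhammad ibn Zakariyya al-Razi that did not appear in the search results. This suggests that it drew on its internal knowledge rather than strictly using the information provided.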

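This backtesting exercise shows that although GPT-4o is generally considered the more capable model, simply upgrading to it would not improve our tweet writer. To use GPT-4o effectively, we would need to:

- Refine our prompt to put more emphasis on using only the provided information

- Or modify our system architecture to better constrain the model's outputs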
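As one narrow illustration of the second option, a post-generation guard could reject tweets that cite URLs absent from the search results. This is a hypothetical sketch rather than a full grounding check: it looks only at links, and the function name and the punctuation handling are assumptions.

```python
import re

URL_PATTERN = re.compile(r"https?://[^\s)\]]+")


def unsupported_urls(tweet: str, context: str) -> list[str]:
    """Return URLs cited in the tweet that never appear in the search context."""
    # Strip trailing punctuation that often sticks to a URL at the end of a sentence.
    cited = [url.rstrip(".,;:!?") for url in URL_PATTERN.findall(tweet)]
    return [url for url in cited if url not in context]
```

An agent wrapper could regenerate or flag a tweet whenever this list is non-empty, leaving the factual grounding of the text itself to an evaluator such as is_grounded.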

This insight demonstrates the value of backtesting: it helped us identify a potential problem before deployment.